Building a JavaScript Testing Framework

In the 2000s, when I was a teenager building web apps and browser games, I built most things from scratch with PHP and JavaScript. One reason was that I didn’t want to use any framework or library I didn’t understand, and the other reason was that things I didn’t understand felt like magic. I have since resigned myself to the fact that I cannot possibly understand every system.

However, I spent time understanding two systems deeply: JavaScript test frameworks and bundlers. Next to compilers and type-checkers, they are among the fundamental tools that make front-end developers effective, yet people rarely have time to learn how these tools work. This post is an approachable guide to understanding test frameworks. By the end, you’ll have a toy test framework, and you’ll understand the fundamental concepts behind a test runner.

You can also consume this post as the live coding video attached to it, and you can listen to the companion podcast for more background information about building a testing framework. The post, video and podcast each contain unique content: pick the one that suits you best, or consume all of them for the full experience.

This post is part of a series about understanding JavaScript infrastructure. Here is where we are at:

- Dependency Managers Don’t Manage Your Dependencies

- Rethinking JavaScript Infrastructure

- Building a JavaScript Testing Framework (you are here)

- Building a JavaScript Bundler

Building a Testing Framework

If you look at Jest, you’ll notice it consists of 50 packages. For our basic test framework, we’ll leverage some of these. By using Jest’s packages we can both get an understanding of the architecture of a test framework as well as learn about how we can use these packages for other purposes. If you’d like to learn more about how Jest is architected, I highly recommend checking out this Jest Architecture video.

We’ll call our basic test framework best. Let’s break down the functionality of a test framework into multiple steps:

- Efficiently search for test files

- Run all the tests in parallel

- Use an assertion framework

- Isolate tests from each other

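You’ll need Node.js 14+ if you are following along because we’ll make a best effort to use ES modules and .mjs files. Let’s get started by initializing an empty project, installing the two packages we need for finding files (yarn add glob jest-haste-map), and generating a few test files in a tests folder.[1]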

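If you’d rather stay in JavaScript, a small Node.js script can generate the sample test files. This is a hypothetical sketch: the helper name generateTestFiles and the file contents (one expect(…).toBe(…) assertion per file, with the third file failing on purpose so we can see failures later) are assumptions for illustration.

```javascript
// generate-tests.js (hypothetical helper): creates a few sample test files.
const fs = require('fs');
const path = require('path');

// Writes `count` files named 01.test.js, 02.test.js, … into `<rootDir>/tests`.
// Each file asserts that a number equals itself, except the third one,
// which is an assumed, deliberately failing example.
function generateTestFiles(rootDir, count = 6) {
  const testsDir = path.join(rootDir, 'tests');
  fs.mkdirSync(testsDir, { recursive: true });
  const created = [];
  for (let i = 1; i <= count; i++) {
    const fileName = String(i).padStart(2, '0') + '.test.js';
    const expected = i === 3 ? i + 1 : i;
    fs.writeFileSync(
      path.join(testsDir, fileName),
      `expect(${i}).toBe(${expected});\n`,
    );
    // Return paths relative to `rootDir`, like glob would.
    created.push(path.join('tests', fileName));
  }
  return created;
}

module.exports = { generateTestFiles };
```

You could then run it once with node -e "require('./generate-tests.js').generateTestFiles(process.cwd())" from the project root.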

Efficiently search for test files

Our first task is to find all the relevant test files in our project. As you see, we just installed two JavaScript packages to handle this. Thanks to Orta Therox you can hover over all code examples to get type information, making it easier to follow along. If you have written a tool with Node.js, this will look familiar:

```javascript
// index.mjs
import glob from 'glob';

const testFiles = glob.sync('**/*.test.js');

console.log(testFiles); // ['tests/01.test.js', 'tests/02.test.js', …]
```

If you run this using node index.mjs, it’ll print the tests in our project, great! Jest itself uses a package called jest-haste-map. The word haste-map comes from Facebook internal lore and no longer applies, but apart from that it is a powerful package to analyze projects and retrieve a list of files within it. Let’s start from scratch with a different approach:

```javascript
// index.mjs
import { cpus } from 'os';
import { dirname } from 'path';
import { fileURLToPath } from 'url';
import JestHasteMap from 'jest-haste-map';

// Get the root path to our project (Like `__dirname`).
const root = dirname(fileURLToPath(import.meta.url));

const hasteMapOptions = {
  extensions: ['js'],
  maxWorkers: cpus().length,
  name: 'best-test-framework',
  platforms: [],
  rootDir: root,
  roots: [root],
};
// Need to use `.default` as of Jest 27.
/** @type {JestHasteMap} */
const hasteMap = new JestHasteMap.default(hasteMapOptions);
// This line is only necessary in `jest-haste-map` version 28 or later.
await hasteMap.setupCachePath(hasteMapOptions);

const { hasteFS } = await hasteMap.build();
const testFiles = hasteFS.getAllFiles();

console.log(testFiles);
// ['/path/to/tests/01.test.js', '/path/to/tests/02.test.js', …]
```

Ok, so we just wrote a lot more code to do the exact same thing as before. Why? While we likely don’t need jest-haste-map for best, this is a useful package for analyzing and operating on large projects:

- Crawls the entire project, extracts dependencies and analyzes files in parallel across worker processes.

- Keeps a cache of the file system in memory and on disk so that file related operations are fast.

- Only does the minimal amount of work necessary when files change.[2]

- Watches the file-system for changes, useful for building interactive tools.

If you’d like to learn more, check out this inline code comment and play with jest-haste-map’s options. Let’s go back to our code example from before, this time with comments:

```javascript
// index.mjs
const hasteMapOptions = {
  extensions: ['js'], // Tells jest-haste-map to only crawl .js files.
  maxWorkers: cpus().length, // Parallelizes across all available CPUs.
  name: 'best-test-framework', // Used for caching.
  platforms: [], // This is only used for React Native, leave empty.
  rootDir: root, // The project root.
  roots: [root], // Can be used to only search a subset of files within `rootDir`.
};
// Need to use `.default` as of Jest 27.
/** @type {JestHasteMap} */
const hasteMap = new JestHasteMap.default(hasteMapOptions);
// This line is only necessary in `jest-haste-map` version 28 or later.
await hasteMap.setupCachePath(hasteMapOptions);
// Build and return an in-memory HasteFS ("Haste File System") instance.
const { hasteFS } = await hasteMap.build();
```

There is just one thing we need to fix: We built a virtual filesystem of all .js files, so we need to apply a filter to limit ourselves to .test.js files. This is where jest-haste-map shines as we can apply a set of globs to the in-memory representation of the file system instead of running actual file system operations:

```javascript
// index.mjs
const testFiles = hasteFS.matchFilesWithGlob(['**/*.test.js']);
```
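To make the “filter in memory instead of touching the disk” idea concrete, here is a dependency-free sketch: it crawls a directory tree once into an array of paths (loosely what the HasteFS snapshot gives us) and then filters that array. It uses a plain regular expression instead of real glob matching, and the names crawl and matchFiles are hypothetical, not part of jest-haste-map, which additionally parallelizes, caches and watches.

```javascript
const fs = require('fs');
const path = require('path');

// Recursively collects every file below `dir` whose extension is in `extensions`.
// Directories listed in `ignore` (like node_modules) are skipped entirely.
function crawl(dir, extensions = ['.js'], ignore = ['node_modules', '.git']) {
  const files = [];
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      if (!ignore.includes(entry.name)) {
        files.push(...crawl(fullPath, extensions, ignore));
      }
    } else if (extensions.includes(path.extname(entry.name))) {
      files.push(fullPath);
    }
  }
  return files;
}

// Filters the in-memory file list; no further file system calls are made.
function matchFiles(allFiles, pattern) {
  return allFiles.filter((file) => pattern.test(file));
}
```

The design choice is the same one jest-haste-map makes: pay for one crawl up front, then answer any number of queries, such as matchFiles(crawl(process.cwd()), /\.test\.js$/), from memory.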

We finished our first requirement: ✅ Efficiently search for test files on the file system.

Run all the tests in parallel

Let’s move on to running tests in parallel. First, let’s read all of the code in our test files:

```javascript
// index.mjs
import fs from 'fs';

await Promise.all(
  Array.from(testFiles).map(async (testFile) => {
    const code = await fs.promises.readFile(testFile, 'utf8');
    console.log(testFile + ':\n' + code);
  }),
);
```

This will print all files with their contents, and it even parallelizes the work. Parallelizes? Well, not quite. It uses async/await, but our JavaScript code runs on a single thread: the file reads can overlap while waiting on I/O, but if we ran the tests in the same loop, they would still execute one after another on a single CPU core. If we want to build a fast test framework, we need to use all available CPUs.[3] Node.js recently received support for worker threads, which allow us to parallelize work across threads within the same process. This requires some boilerplate, so instead we’ll make use of the jest-worker package by running yarn add jest-worker. Next to our index file, we also need a separate module that knows how to execute a test in a worker process. Let’s create a new file worker.js:[4]

```javascript
// worker.js
const fs = require('fs');

/** @type {(testFile: string) => Promise<string>} */
exports.runTest = async function (testFile) {
  const code = await fs.promises.readFile(testFile, 'utf8');
  return testFile + ':\n' + code;
};
```

Now, we could use this directly in our index.mjs file instead of the loop we had earlier:

```javascript
// index.mjs
import { runTest } from './worker.js';

await Promise.all(
  Array.from(testFiles).map(async (testFile) => {
    console.log(await runTest(testFile));
  }),
);
```

But that doesn’t yet parallelize anything. We’ll need to set up the connection between the index and the worker file:

```javascript
// index.mjs
import { join } from 'path';
import { Worker } from 'jest-worker';

const worker = new Worker(join(root, 'worker.js'));

await Promise.all(
  Array.from(testFiles).map(async (testFile) => {
    const testResult = await worker.runTest(testFile);
    console.log(testResult);
  }),
);

worker.end(); // Shut down the worker.
```

If you run this, best will create multiple processes and run our code in worker.js in parallel using all available CPUs. You can verify this by changing the return value of our runTest function like below, which will print a different id for each worker process:[5]

```javascript
// worker.js
exports.runTest = async function (testFile) {
  const code = await fs.promises.readFile(testFile, 'utf8');
  return `worker id: ${process.env.JEST_WORKER_ID}\nfile: ${testFile}:\n${code}`;
};
```

However, we were just talking about worker threads and jest-worker is creating processes, not running code in different threads. Let’s enable worker threads instead:

```javascript
// index.mjs
const worker = new Worker(join(root, 'worker.js'), {
  enableWorkerThreads: true,
});
```
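For comparison, here is roughly the kind of boilerplate jest-worker saves us from writing. This is a simplified sketch using Node’s built-in worker_threads module directly, not how jest-worker is implemented: a fixed pool of threads pulls file paths from a shared queue and reports back each file name and the length of its contents. The function name runInThreadPool is hypothetical, and error handling is kept to a minimum.

```javascript
const { Worker } = require('worker_threads');
const os = require('os');

// Source code for each worker thread, evaluated as a CommonJS script.
const WORKER_SOURCE = `
  const { parentPort } = require('worker_threads');
  const fs = require('fs');
  parentPort.on('message', (file) => {
    const code = fs.readFileSync(file, 'utf8');
    parentPort.postMessage({ file, length: code.length });
  });
`;

// Processes `files` on a pool of at most `size` threads and resolves with all results.
function runInThreadPool(files, size = os.cpus().length) {
  return new Promise((resolve, reject) => {
    const queue = [...files];
    const results = [];
    if (queue.length === 0) {
      resolve(results);
      return;
    }
    const poolSize = Math.max(1, Math.min(size, queue.length));
    let active = poolSize;
    for (let i = 0; i < poolSize; i++) {
      const worker = new Worker(WORKER_SOURCE, { eval: true });
      // Hands the next file to this worker, or shuts it down when the queue is empty.
      const next = () => {
        const file = queue.shift();
        if (file !== undefined) {
          worker.postMessage(file);
          return;
        }
        worker.terminate();
        active -= 1;
        if (active === 0) {
          resolve(results);
        }
      };
      worker.on('message', (result) => {
        results.push(result);
        next();
      });
      worker.on('error', reject);
      next();
    }
  });
}
```

Results arrive in completion order, not input order, which is also why best prints test files in a different order from run to run.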

Awesome, with only about 30 lines of code we built a program that finds all test files and processes them in parallel. We therefore completed our second requirement: ✅ Run all the tests in parallel. Here is all the code we have written so far:

```javascript
// index.mjs
import fs from 'fs';
import { cpus } from 'os';
import { dirname, join } from 'path';
import { fileURLToPath } from 'url';
import JestHasteMap from 'jest-haste-map';
import { Worker } from 'jest-worker';

// Get the root path to our project (Like `__dirname`).
const root = dirname(fileURLToPath(import.meta.url));

const hasteMapOptions = {
  extensions: ['js'],
  maxWorkers: cpus().length,
  name: 'best-test-framework',
  platforms: [],
  rootDir: root,
  roots: [root],
};
// Need to use `.default` as of Jest 27.
/** @type {JestHasteMap} */
const hasteMap = new JestHasteMap.default(hasteMapOptions);
// This line is only necessary in `jest-haste-map` version 28 or later.
await hasteMap.setupCachePath(hasteMapOptions);
const { hasteFS } = await hasteMap.build();
const testFiles = hasteFS.matchFilesWithGlob(['**/*.test.js']);

/** @type {Worker & {runTest: (testFile: string) => Promise<string>}} */
const worker = new Worker(join(root, 'worker.js'), {
  enableWorkerThreads: true,
});

await Promise.all(
  Array.from(testFiles).map(async (testFile) => {
    const testResult = await worker.runTest(testFile);
    console.log(testResult);
  }),
);

worker.end();
```

```javascript
// worker.js
const fs = require('fs');

/** @type {(testFile: string) => Promise<string>} */
exports.runTest = async function (testFile) {
  const code = await fs.promises.readFile(testFile, 'utf8');
  return testFile + ':\n' + code;
};
```


Use an assertion framework

Alright, we made it pretty far already, but we aren’t actually running tests yet; we just built a distributed file reader. Wait a minute… that sounds familiar. Didn’t we say that jest-haste-map analyzes files on the file system? Yes it does, and it uses jest-worker under the hood for parallelization. On a modern SSD, reading files in parallel across threads or processes can be much faster than doing it in a single process. How convenient!

Alright, back to building our test runner best. How do we actually run our tests? Let’s start by using eval:

```javascript
// worker.js
const fs = require('fs');

/** @type {(testFile: string) => Promise<void>} */
exports.runTest = async function (testFile) {
  const code = await fs.promises.readFile(testFile, 'utf8');
  eval(code);
};
```

When we run our test framework it will now immediately crash with “ReferenceError: expect is not defined”. Let’s add a guard to the eval call so that a single failing test cannot bring down our whole test framework:

```javascript
// worker.js
try {
  eval(code);
} catch (error) {
  // Something went wrong.
}
```

Running this will report “undefined” for each test. It’s time that we start thinking about reporting test results from the worker process to the parent process. Let’s define a testResult structure:

```javascript
// worker.js
exports.runTest = async function (testFile) {
  const code = await fs.promises.readFile(testFile, 'utf8');
  /** @type {{success: boolean, errorMessage: string | null}} */
  const testResult = {
    success: false,
    errorMessage: null,
  };
  try {
    eval(code);
    testResult.success = true;
  } catch (error) {
    testResult.errorMessage = error.message;
  }
  return testResult;
};
```

With this our test framework will execute all tests and report the success or failure of each. It’s time to build out our assertion framework. Let’s start with a basic expect(…).toBe matcher just above our invocation of eval:

```javascript
// worker.js
const expect = (received) => ({
  toBe: (expected) => {
    if (received !== expected) {
      throw new Error(`Expected ${expected} but received ${received}.`);
    }
    return true;
  },
});

eval(code);
```

Wow, that’s it: with just two nested functions in our custom expect implementation, we have a fast, parallelized test framework that reports the success or failure of each test file. This works because a direct call to eval has access to the scope around it, including our expect function. Can you add new assertions like toBeGreaterThan, toContain, or stringContaining and make use of them in your test files?[6]

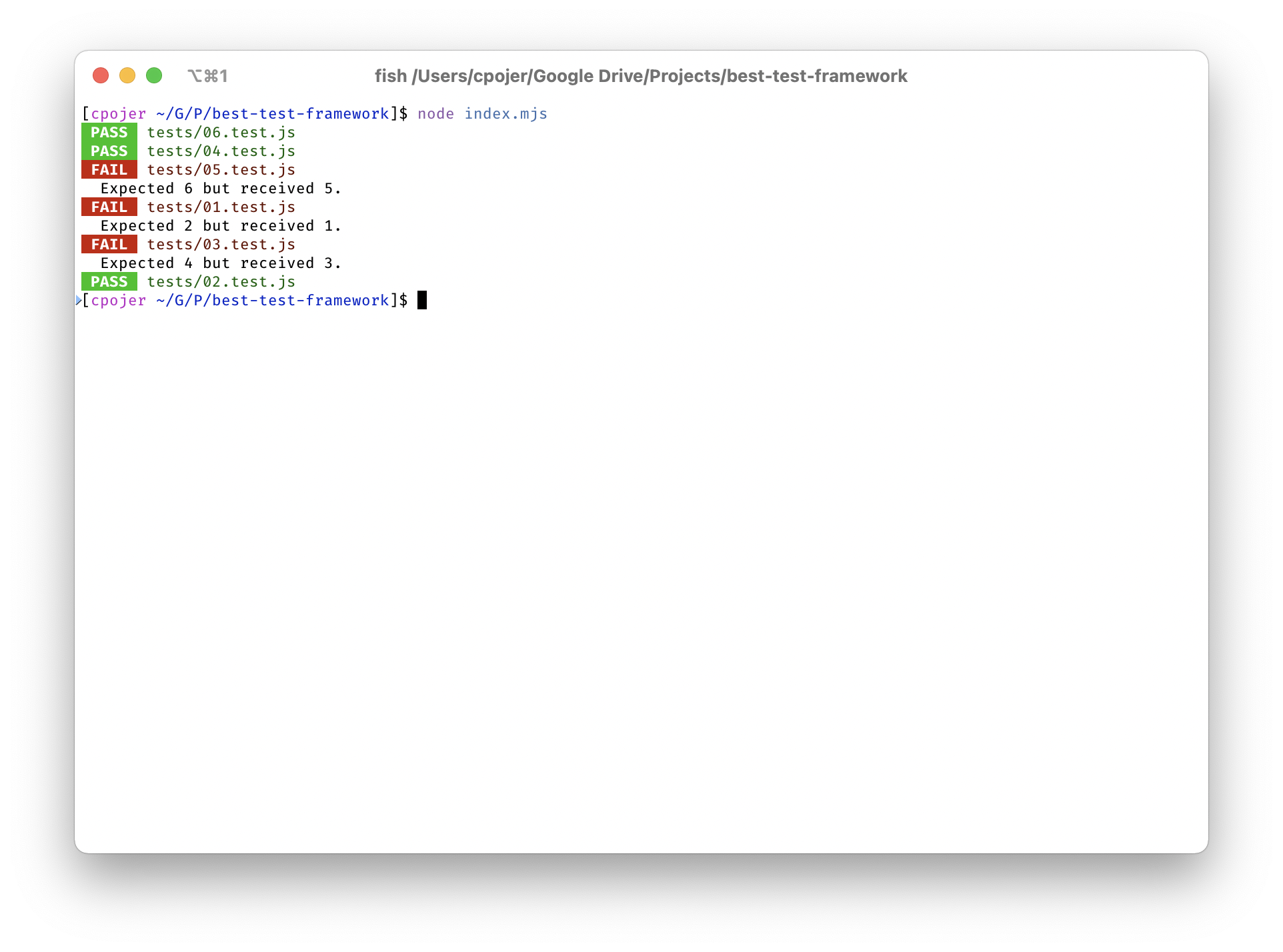
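If you want to try that exercise, here is one possible sketch of the hand-rolled expect with toBeGreaterThan and toContain added. It keeps the same throw-on-failure contract as toBe, so the try/catch around eval keeps working unchanged. This is a hypothetical extension, not how the expect package implements these matchers; stringContaining is an asymmetric matcher that is used inside other matchers, so it is left out here.

```javascript
// worker.js (sketch): a slightly larger hand-rolled `expect`.
const expect = (received) => ({
  // Strict equality, as before.
  toBe: (expected) => {
    if (received !== expected) {
      throw new Error(`Expected ${expected} but received ${received}.`);
    }
    return true;
  },
  // Numeric comparison.
  toBeGreaterThan: (expected) => {
    if (!(received > expected)) {
      throw new Error(`Expected ${received} to be greater than ${expected}.`);
    }
    return true;
  },
  // Works for both arrays and strings, since both have an `includes` method.
  toContain: (item) => {
    const canSearch = received != null && typeof received.includes === 'function';
    if (!canSearch || !received.includes(item)) {
      throw new Error(
        `Expected ${JSON.stringify(received)} to contain ${JSON.stringify(item)}.`,
      );
    }
    return true;
  },
});
```

A test file could then use expect([1, 2, 3]).toContain(2) or expect(10).toBeGreaterThan(5) just like toBe.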

Once you are done playing with assertions, let’s spend some time improving the output of best. Currently, running node index.mjs only prints one raw { success, errorMessage } object per test file.

We can’t really see which tests succeeded and which tests failed. I think it is time to add some of the iconic Jest output. Let’s run yarn add chalk, modify our test loop, and re-run all tests:

```javascript
// index.mjs
import { relative } from 'path';
import chalk from 'chalk';

await Promise.all(
  Array.from(testFiles).map(async (testFile) => {
    const { success, errorMessage } = await worker.runTest(testFile);
    const status = success
      ? chalk.green.inverse.bold(' PASS ')
      : chalk.red.inverse.bold(' FAIL ');

    console.log(status + ' ' + chalk.dim(relative(root, testFile)));
    if (!success) {
      console.log('  ' + errorMessage);
    }
  }),
);
```

We did it, we built Jest… ehh, best![7] But let’s not get ahead of ourselves; there is still something we can do to improve our assertions. Let’s run yarn add expect, which is yet another package that is part of Jest, and change our worker.js file like this:

```javascript
// worker.js
const fs = require('fs');
const expect = require('expect');

/** @type {(testFile: string) => Promise<{success: boolean, errorMessage: string | null}>} */
exports.runTest = async function (testFile) {
  const code = await fs.promises.readFile(testFile, 'utf8');
  const testResult = {
    success: false,
    errorMessage: null,
  };
  try {
    eval(code);
    testResult.success = true;
  } catch (error) {
    testResult.errorMessage = error.message;
  }
  return testResult;
};
```

When we run our test framework again, we are now using the exact same code that Jest uses for assertions. Not convinced yet? Run yarn add jest-mock, add const mock = require('jest-mock'); to the top of worker.js and create a new test file like this:

```javascript
// tests/mock.test.js
const fn = mock.fn();

expect(fn).not.toHaveBeenCalled();
fn();
expect(fn).toHaveBeenCalled();
```
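As an aside, the idea behind toHaveBeenCalled is less magical than it looks. Below is a hypothetical, much smaller stand-in for mock.fn(): a function that records the arguments of every call in a mock.calls array (the same property name jest-mock exposes), plus a matching hand-rolled assertion. The real package tracks far more, such as return values, instances and implementations, so treat this as an illustration only.

```javascript
// A hypothetical, minimal stand-in for `mock.fn()` from jest-mock.
function createMockFn(implementation = () => undefined) {
  const mockFn = function (...args) {
    // Record the arguments of every call before delegating.
    mockFn.mock.calls.push(args);
    return implementation.apply(this, args);
  };
  mockFn.mock = { calls: [] };
  return mockFn;
}

// A matching assertion in the style of our earlier hand-rolled `expect`.
function assertCalled(mockFn) {
  if (mockFn.mock.calls.length === 0) {
    throw new Error('Expected the mock function to have been called.');
  }
  return true;
}
```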

Upon invoking node index.mjs, jest-haste-map will recognize the newly added file, execute our test, and report it as passing. We now have seven tests and it is getting a bit hard to identify the test we care about in the output. Let’s quickly add test filtering via the command line:

```javascript
// index.mjs
const testFiles = hasteFS.matchFilesWithGlob([
  process.argv[2] ? `**/${process.argv[2]}*` : '**/*.test.js',
]);
```

Now you can run node index.mjs mock.test.js and it will only run the tests matching the pattern.[8] While we are correctly printing the test results, we are not exiting the process with the right failure code. Let’s keep track of whether tests have failed and set process.exitCode in case something went wrong:

```javascript
// index.mjs
let hasFailed = false;
await Promise.all(
  Array.from(testFiles).map(async (testFile) => {
    const { success, errorMessage } = await worker.runTest(testFile);
    const status = success
      ? chalk.green.inverse.bold(' PASS ')
      : chalk.red.inverse.bold(' FAIL ');

    console.log(status + ' ' + chalk.dim(relative(root, testFile)));
    if (!success) {
      hasFailed = true; // Something went wrong!
      console.log('  ' + errorMessage);
    }
  }),
);
worker.end();
if (hasFailed) {
  console.log(
    '\n' + chalk.red.bold('Test run failed, please fix all the failing tests.'),
  );
  // Set an exit code to indicate failure.
  process.exitCode = 1;
}
```

✅ Step three, using an assertion framework for writing tests and reporting results, was accomplished with two more Jest packages: expect and jest-mock. Before we move on, something is bothering me, and you might have noticed it too: our tests only contain assertions, but test frameworks usually provide grouping methods like describe and it. Create the following file:

```javascript
// tests/circus.test.js
describe('circus test', () => {
  it('works', () => {
    expect(1).toBe(1);
  });
});

describe('second circus test', () => {
  it(`doesn't work`, () => {
    expect(1).toBe(2);
  });
});
```

Let’s extend our test framework so that it can handle these blocks and print the full test name in the case of a failure. We are now technically separating the definition of tests from actually executing them. Let’s update our test runner to include basic implementations of describe and it:

```javascript
// worker.js
try {
  /** @type {Array<[string, () => void]>} */
  const describeFns = [];
  /** @type {Array<[string, () => void]>} */
  let currentDescribeFn;
  /** @type {(name: string, fn: () => void) => void} */
  const describe = (name, fn) => describeFns.push([name, fn]);
  /** @type {(name: string, fn: () => void) => void} */
  const it = (name, fn) => currentDescribeFn.push([name, fn]);
  eval(code);
  testResult.success = true;
} catch (error) {
  // …
}
```

When running our new test via node index.mjs circus it will wrongly claim that the test passes. This is because we aren’t executing any assertions right now, we are just keeping track of the calls to describe. After eval(code);, we need to actually run our describe and it functions:

```javascript
// worker.js
let testName; // Use this variable to keep track of the current test.
try {
  /** @type {Array<[string, () => void]>} */
  const describeFns = [];
  /** @type {Array<[string, () => void]>} */
  let currentDescribeFn;
  /** @type {(name: string, fn: () => void) => void} */
  const describe = (name, fn) => describeFns.push([name, fn]);
  /** @type {(name: string, fn: () => void) => void} */
  const it = (name, fn) => currentDescribeFn.push([name, fn]);
  eval(code);
  for (const [describeName, describeFn] of describeFns) {
    currentDescribeFn = [];
    testName = describeName;
    // Calling the describe callback collects its `it` blocks.
    describeFn();

    currentDescribeFn.forEach(([itName, itFn]) => {
      // Reset the name for every `it` so names don't accumulate.
      testName = describeName + ' ' + itName;
      itFn();
    });
  }
  testResult.success = true;
} catch (error) {
  testResult.errorMessage = testName + ': ' + error.message;
}
```

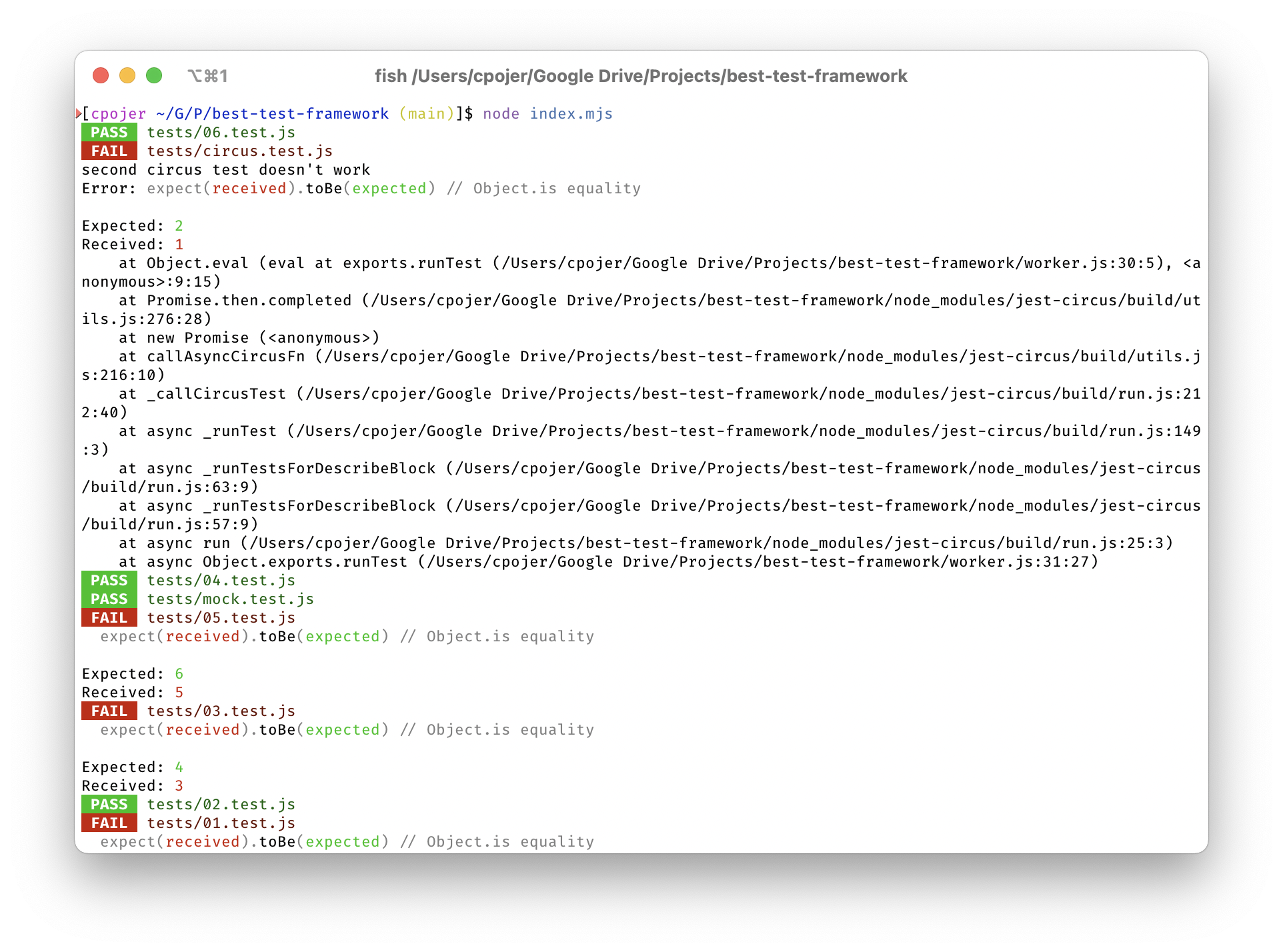

Now we are correctly reporting the name of the test that failed. Upon running node index.mjs circus, our test framework will print second circus test doesn't work: expect(received).toBe(expected). Neat!
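Our describe/it implementation supports a single level of nesting and only synchronous tests. To get a feel for what it takes to go further, here is a hypothetical sketch of a tiny runner that collects a tree of nested describe blocks and then awaits each test in order, recording the full test path and the error message for failures. It is a simplification (no hooks, timeouts or skipping, and a block’s own tests run before its nested blocks), and it is not how jest-circus is structured internally.

```javascript
// A hypothetical mini-runner: nested `describe` blocks and async `it` callbacks.
function createRunner() {
  const root = { name: null, children: [], tests: [] };
  let current = root;

  // `describe` runs its callback immediately to collect nested blocks and tests.
  const describe = (name, fn) => {
    const block = { name, children: [], tests: [] };
    current.children.push(block);
    const parent = current;
    current = block;
    try {
      fn();
    } finally {
      current = parent;
    }
  };

  // `it` only registers the test; it runs later inside `run`.
  const it = (name, fn) => current.tests.push({ name, fn });

  // Walks the tree depth-first, awaiting every test and recording its result.
  async function run(block = root, parentPath = []) {
    const results = [];
    const blockPath = block.name === null ? parentPath : [...parentPath, block.name];
    for (const test of block.tests) {
      const testPath = [...blockPath, test.name];
      try {
        await test.fn();
        results.push({ testPath, errors: [] });
      } catch (error) {
        results.push({ testPath, errors: [error.message] });
      }
    }
    for (const child of block.children) {
      results.push(...(await run(child, blockPath)));
    }
    return results;
  }

  return { describe, it, run };
}
```

Separating collection (describe/it) from execution (run) is what makes async tests possible: run can await each test, which our synchronous forEach loop cannot.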

A full test runner has the ability to nest describe calls, allows async execution of tests, manages timeouts, reports status and can skip over disabled tests. You know what’s coming, right? Exactly, there is a package called jest-circus that we can integrate to take care of just that. Add it via yarn add jest-circus and let’s refactor our worker:

```javascript
// worker.js
const fs = require('fs');
const expect = require('expect');
const mock = require('jest-mock');
// Provide `describe` and `it` to tests.
const { describe, it, run } = require('jest-circus');

exports.runTest = async function (testFile) {
  const code = await fs.promises.readFile(testFile, 'utf8');
  /** @type {{success: boolean, testResults?: Array<{errors: Array<string>, testPath: Array<string>}>, errorMessage: string | null}} */
  const testResult = {
    success: false,
    errorMessage: null,
  };
  try {
    eval(code);
    // Run jest-circus.
    const { testResults } = await run();
    testResult.testResults = testResults;
    testResult.success = testResults.every((result) => !result.errors.length);
  } catch (error) {
    testResult.errorMessage = error.message;
  }
  return testResult;
};
```

On the receiving side, let’s make use of the newly added information to print the full test names:

```javascript
// index.mjs
await Promise.all(
  Array.from(testFiles).map(async (testFile) => {
    /** @type {{success: boolean, testResults?: Array<{errors: Array<string>, testPath: Array<string>}>, errorMessage: string | null}} */
    const { success, testResults, errorMessage } =
      await worker.runTest(testFile);
    const status = success
      ? chalk.green.inverse.bold(' PASS ')
      : chalk.red.inverse.bold(' FAIL ');

    console.log(status + ' ' + chalk.dim(relative(root, testFile)));
    if (!success) {
      hasFailed = true;
      // Make use of the rich `testResults` and error messages.
      if (testResults) {
        testResults
          .filter((result) => result.errors.length)
          .forEach((result) =>
            console.log(
              // Skip the first part of the path which is an internal token.
              result.testPath.slice(1).join(' ') + '\n' + result.errors[0],
            ),
          );
        // If the test crashed before `jest-circus` ran, report it here.
      } else if (errorMessage) {
        console.log('  ' + errorMessage);
      }
    }
  }),
);
```

This works pretty well, but if you are running many tests you’ll notice that state is shared across test files because jest-circus doesn’t reset itself. That’s not great, so let’s fix it by calling the resetState function provided by jest-circus before executing our test code:

```javascript
// worker.js
const { describe, it, run, resetState } = require('jest-circus');

try {
  resetState();
  eval(code);
  const { testResults } = await run();
  // […]
} catch (error) {
  /* […] */
}
```

With fewer than 100 lines of code, we already have all of this functionality working.

Isolate tests from each other

With jest-circus we ran into this situation where we could end up sharing state between two tests. We only allocate as many worker processes/threads as we have CPUs available, so if we are running more tests, jest-worker reuses the same worker with different test files. At the same time, we are executing our code with eval, meaning that any code inside of a test can leak and affect other test files. You can try to break best by attaching methods to Array.prototype from within one test and using it from another.[9]

Luckily Node.js has a vm module that can be used to sandbox code.[10] We can use this module to isolate tests from each other and only expose a subset of functionality to test files:

```javascript
// worker.js
const vm = require('vm');

// Replace `eval(code);` with this:
const context = { describe, it, expect, mock };
vm.createContext(context);
vm.runInContext(code, context);
```

And that’s all: tests will no longer be able to affect each other![11]
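A quick way to convince yourself: in the standalone sketch below, code running in one context patches Array.prototype, and the patch is visible neither in a second context nor in the main program, because each context created with vm.createContext gets its own set of built-ins. Objects passed in through the context object itself are still shared, so this isolates built-ins, not everything.

```javascript
const vm = require('vm');

// Each context has its own global object and its own built-ins like Array.
const contextA = vm.createContext({});
const contextB = vm.createContext({});

// "Test A" monkey-patches Array.prototype inside its own context.
vm.runInContext(
  'Array.prototype.last = function () { return this[this.length - 1]; };',
  contextA,
);

// Check whether the patch is visible in each place.
const inA = vm.runInContext('typeof [].last', contextA);
const inB = vm.runInContext('typeof [].last', contextB);
const inMain = typeof [].last;

console.log({ inA, inB, inMain });
```

Only inA reports 'function'; both inB and inMain report 'undefined'.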

Let’s try to write an async test:

```javascript
// tests/circus.test.js
describe('second circus test', () => {
  it(`doesn't work`, async () => {
    await new Promise((resolve) => setTimeout(resolve, 2000));
    expect(1).toBe(2);
  });
});
```

This test will fail and report that setTimeout is not defined. This is because the context object acts as the global object inside the vm, which means that the sandboxed code has no access to setTimeout, Buffer and various other Node.js features that are only defined in our main context. Jest uses the jest-environment-node package to provide a Node.js-like environment for tests. Let’s try it via yarn add jest-environment-node:

```javascript
// worker.js
// Replace this:
const context = { describe, it, expect, mock };
vm.createContext(context);
// With this:
const NodeEnvironment = require('jest-environment-node');
const environment = new NodeEnvironment({
  projectConfig: {
    testEnvironmentOptions: { describe, it, expect, mock },
  },
});
vm.runInContext(code, environment.getVmContext());
```
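The underlying idea is simple: a fresh context only has ECMAScript built-ins, so Node.js-specific globals have to be copied in. The sketch below is a hypothetical, heavily reduced version of that idea using only the vm module; the helper name createNodeLikeContext is made up, and the real jest-environment-node sets up far more (and manages timers and teardown carefully), so don’t read this as its implementation.

```javascript
const vm = require('vm');

// A bare context: only ECMAScript built-ins such as Array or Promise exist here.
const bareContext = vm.createContext({});
console.log(vm.runInContext('typeof setTimeout', bareContext)); // prints "undefined"

// Hypothetical helper: creates a context with a few Node.js globals copied in,
// plus any extra globals (like describe/it/expect) we want to expose.
function createNodeLikeContext(extraGlobals = {}) {
  const sandbox = {
    setTimeout,
    clearTimeout,
    setInterval,
    clearInterval,
    setImmediate,
    clearImmediate,
    Buffer,
    console,
    process,
    ...extraGlobals,
  };
  return vm.createContext(sandbox);
}

const nodeLikeContext = createNodeLikeContext({ answer: 42 });
console.log(vm.runInContext('typeof setTimeout', nodeLikeContext)); // prints "function"
```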

Finally, there is one thing we haven’t had a chance to consider: requiring other files from within our tests. Previously we were using eval, which meant that every require call was resolved relative to our worker file and not the test file. Now we are using a vm context, which does not even have a require implementation. A full implementation can get quite complex, but let’s try to approximate one. First, we need to update our test files:

```javascript
// tests/circus.test.js
const banana = require('./banana.js');

it('tastes good', () => {
  expect(banana).toBe('good');
});
```

```javascript
// tests/banana.js
module.exports = 'good';
```

If we run the code as is, we’ll learn that “require is not defined”. What we need to do is provide a custom require implementation in our sandbox that can identify the correct file to load and evaluate it. When we were using eval, we could do this by defining variables before executing our code. Now we need to actually modify the code we are running to define local variables. A first approach could look like this:

```javascript
// worker.js
let environment;
const { dirname, join } = require('path');

/** @type {(fileName: string) => any} */
const customRequire = (fileName) => {
  const code = fs.readFileSync(join(dirname(testFile), fileName), 'utf8');
  return vm.runInContext(
    // Define a module variable, run the code and "return" the exports object.
    'const module = {exports: {}};\n' + code + ';module.exports;',
    environment.getVmContext(),
  );
};

environment = new NodeEnvironment({
  projectConfig: {
    testEnvironmentOptions: {
      describe,
      it,
      expect,
      mock,
      // Add the custom require implementation as a global function.
      require: customRequire,
    },
  },
});
```

And it works! Our test passes, but what if we want to call require from our banana.js file or include more than one other module?

```javascript
// tests/apple.js
module.exports = 'delicious';
```

```javascript
// tests/circus.test.js
const apple = require('./apple.js');

it('tastes delicious', () => {
  expect(apple).toBe('delicious');
});
```

We’ll get the error “Identifier 'module' has already been declared”. Top-level const declarations from one vm.runInContext call stay visible to later calls in the same context, so the second module tries to redeclare the module variable of the first. We need to modify our approach to isolate individual modules from each other within a context. How do we usually ensure that variable definitions are only valid for one part of the code? That’s right: we separate our code into functions! This is tricky because we need to somehow share information between the parent and sandbox context without relying on the global scope. If you paid close attention in the above example, vm.runInContext actually returns the value of the last evaluated expression to the parent context, kind of like an implicit return. Let’s make use of that and swap our customRequire function with this function wrapper:

```javascript
// worker.js
const customRequire = (fileName) => {
  const code = fs.readFileSync(join(dirname(testFile), fileName), 'utf8');
  // Define a function in the `vm` context and return it.
  const moduleFactory = vm.runInContext(
    `(function(module) {${code}})`,
    environment.getVmContext(),
  );
  const module = { exports: {} };
  // Run the sandboxed function with our module object.
  moduleFactory(module);
  return module.exports;
};
```
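To see both the problem and the fix in isolation, the standalone sketch below loads two tiny “modules” into the same context. Declaring const module at the top level of each script fails on the second script, while wrapping each module’s source in a function expression gives every module its own scope. The helper names runTopLevel and loadModuleSource are hypothetical.

```javascript
const vm = require('vm');

const context = vm.createContext({});

// Approach 1: declare `module` at the top level of each script.
const runTopLevel = (source) =>
  vm.runInContext(
    `const module = { exports: {} };\n${source};\nmodule.exports;`,
    context,
  );

const first = runTopLevel("module.exports = 'good';");
let secondError = null;
try {
  runTopLevel("module.exports = 'delicious';");
} catch (error) {
  // The second script re-declares `module` in the shared top-level scope.
  secondError = error;
}

// Approach 2: wrap each module's source in a function expression.
function loadModuleSource(source) {
  // The parenthesized function is the last expression, so runInContext returns it.
  const moduleFactory = vm.runInContext(`(function (module) {${source}\n})`, context);
  const module = { exports: {} };
  moduleFactory(module);
  return module.exports;
}

console.log(first); // prints "good"
console.log(secondError && secondError.name); // prints "SyntaxError"
console.log(loadModuleSource("module.exports = 'delicious';")); // prints "delicious"
```

The error is checked by name rather than with instanceof because it may be created in the sandbox’s realm, whose SyntaxError is a different constructor from the one in our main program.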

Perfect, we are now able to require multiple modules, and each of them gets its own “module” scope. To provide a proper module and require implementation, we’ll need local state and a custom require implementation for every file that we want to execute in our framework. We also need to handle the full Node.js module resolution algorithm. Let’s do one final pass to remove require as a global variable by injecting it at the module level, and to use our customRequire function for the test file itself to clean everything up a bit. Our final version of worker.js looks like this:

```javascript
// worker.js
const fs = require('fs');
const expect = require('expect');
const mock = require('jest-mock');
const { describe, it, run, resetState } = require('jest-circus');
const vm = require('vm');
const NodeEnvironment = require('jest-environment-node');
const { dirname, basename, join } = require('path');

/** @type {(testFile: string) => Promise<{success: boolean, testResults?: Array<{errors: Array<string>, testPath: Array<string>}>, errorMessage: string | null}>} */
exports.runTest = async function (testFile) {
  const testResult = {
    success: false,
    errorMessage: null,
  };
  try {
    resetState();
    /** @type {NodeEnvironment} */
    let environment;
    /** @type {(fileName: string) => any} */
    const customRequire = (fileName) => {
      const code = fs.readFileSync(join(dirname(testFile), fileName), 'utf8');
      const moduleFactory = vm.runInContext(
        // Inject require as a variable here.
        `(function(module, require) {${code}})`,
        environment.getVmContext(),
      );
      const module = { exports: {} };
      // And pass customRequire into our moduleFactory.
      moduleFactory(module, customRequire);
      return module.exports;
    };
    environment = new NodeEnvironment({
      projectConfig: {
        testEnvironmentOptions: {
          describe,
          it,
          expect,
          mock,
        },
      },
    });
    // Use `customRequire` to run the test file.
    customRequire(basename(testFile));
    const { testResults } = await run();
    testResult.testResults = testResults;
    testResult.success = testResults.every((result) => !result.errors.length);
  } catch (error) {
    testResult.errorMessage = error.message;
  }
  return testResult;
};
```
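One limitation of this customRequire is that every path is resolved relative to the test file, even when the require call lives in a module in another folder, and a module is re-evaluated every time it is required. The hypothetical sketch below addresses both, assuming plain relative paths with explicit file extensions (no node_modules lookup and no index.js or extension guessing): each module gets its own require bound to its own directory, and evaluated modules are cached by absolute path, similar in spirit to Node’s require.cache. The names createModuleLoader and requireFrom are illustrative only.

```javascript
const fs = require('fs');
const path = require('path');
const vm = require('vm');

// Hypothetical loader: evaluates CommonJS-style files inside `context`.
function createModuleLoader(context) {
  // Cache of evaluated modules, keyed by absolute file path.
  const cache = new Map();

  // Returns a `require` function that resolves paths relative to `fromDir`.
  function requireFrom(fromDir) {
    return (request) => {
      const filename = path.resolve(fromDir, request);
      if (cache.has(filename)) {
        return cache.get(filename).exports;
      }
      const module = { exports: {} };
      // Cache before evaluating so circular requires receive partial exports.
      cache.set(filename, module);
      const code = fs.readFileSync(filename, 'utf8');
      const moduleFactory = vm.runInContext(
        `(function (module, exports, require) {${code}\n})`,
        context,
        { filename },
      );
      try {
        // Each module gets a require bound to its own directory.
        moduleFactory(module, module.exports, requireFrom(path.dirname(filename)));
      } catch (error) {
        // Don't keep half-initialized modules around.
        cache.delete(filename);
        throw error;
      }
      return module.exports;
    };
  }

  return { requireFrom, cache };
}
```

In worker.js, you would call createModuleLoader(environment.getVmContext()).requireFrom(dirname(testFile))(basename(testFile)) once per test file, so each test file still starts with a fresh cache.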

Advanced Testing

A real test framework has to provide a real require implementation and compile files like TypeScript into something that runs in Node.js. Jest has an entire suite of packages to deal with these requirements, like jest-runtime, jest-resolve and jest-transform. You should now have all the knowledge required to piece these things together and build an awesome test framework! If you have made it this far, consider adding the following features to your test framework:

- Print the name of every describe/it, including passed tests.

- Print a summary of how many tests and assertions passed or failed, and how long the test run took.

- Ensure there is at least one test in a file using it(…), and fail the test if there aren’t any assertions.

- Inject describe, it, expect and mock using a “fake module” that can be loaded via require('best').

- Medium: Transform the test code using Babel or TypeScript ahead of execution.

- Medium: Add a configuration file and command line flags to customize test runs, like changing the output colors, limiting the number of worker processes or a bail option that exits as soon as one test fails.

- Medium: Add a feature to record and compare snapshots.

- Advanced: Add a watch mode that re-runs tests when they change, using jest-haste-map’s watch option and listening to changes via hasteMap.on('change', (changeEvent) => { … }).

- Advanced: Make use of jest-runtime and jest-resolve to provide a full module and require implementation.

- Advanced: What would it take to collect code coverage? How can we transform our test code to keep track of which lines of code were run?
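For the summary exercise, one possible starting point is a small formatting helper. The sketch assumes results shaped like the jest-circus testResults we have been passing around (an errors array per test) and start and end times taken with Date.now(); it counts tests rather than assertions, and the function name formatSummary and its output wording are hypothetical.

```javascript
// Hypothetical helper: summarizes jest-circus-style results ({ errors: [...] } per test).
function formatSummary(testResults, startTime, endTime = Date.now()) {
  const failed = testResults.filter((result) => result.errors.length > 0).length;
  const passed = testResults.length - failed;
  const seconds = ((endTime - startTime) / 1000).toFixed(2);
  const parts = [];
  // Only mention failures when there are some.
  if (failed > 0) {
    parts.push(`${failed} failed`);
  }
  parts.push(`${passed} passed`);
  return `Tests: ${parts.join(', ')}, ${testResults.length} total\nTime: ${seconds}s`;
}
```

For example, two passing tests and one failing test over 1.5 seconds produce “Tests: 1 failed, 2 passed, 3 total” followed by “Time: 1.50s”.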

With exactly 100 lines of code we built a powerful test framework similar to Jest. You can find the full implementation of the best testing framework on GitHub. While we added a lot of features commonly found in test frameworks, there is a lot more work needed to turn this into a viable testing framework:

- Sequencing tests to optimize performance

- Compiling JavaScript or TypeScript files

- Collecting code coverage

- Interactive watch mode

- Snapshot testing

- Running multiple projects in a single test run

- Live reporting and printing a test run summary

- and more…

As you have seen, Jest is not just a testing framework, it’s also a framework for building test frameworks through its 50 packages. In the next post we’ll use our new knowledge to build a JavaScript bundler.

Footnotes

[1] If you are impatient, you can find the full source of the best testing framework on GitHub.

[2] If you are using watchman (recommended for large projects), Jest will ask watchman for changed files instead of crawling the file system. This is very fast even if you have tens of thousands of files.

[3] Or better yet: distribute the test runs across several different computers.

[4] Unfortunately, due to a limitation in jest-worker, we cannot use an ECMAScript module for the worker yet.

[5] This works when jest-worker uses processes for parallelization, but not when it uses worker threads because technically all code is run in the same process.

[7] Fun fact: The reason why Jest prints “RUNS” for currently running tests is because it was the only fitting word we could find that was of the same length as “PASS”/“FAIL” to ensure the output stays aligned.

[8] If you are passing globs, make sure to escape your input with quotes: node index.mjs "0*.test.js"

[9] This may be a bit tricky and depends on how many CPUs you have. If you set the numWorkers option for the jest-worker instance to 1, all tests will run in the same process/thread and you should be able to break out of a test file and affect others.

[10] As stated in the documentation, “the vm module is not a security mechanism. Do not use it to run untrusted code.”

[11] Because data is shared directly with the vm context via the context variable, it is still possible to access data types and mess around with the parent context or other tests. This can be partially mitigated by running all code within the vm context, but again, do not consider this a secure sandbox.