Dependency Managers Don’t Manage Your Dependencies

I promised to write about tech, so let’s start building our JavaScript infrastructure muscles. In the next several blog posts, I’ll cover introductory topics on dependency management, actionable tips for making your infrastructure better, and guides for building JavaScript infrastructure. We’ll slowly develop our shared understanding so we can talk more deeply about JavaScript infrastructure:

- Dependency Managers Don’t Manage Your Dependencies (you are here)

- Rethinking JavaScript Infrastructure

- Building a JavaScript Testing Framework

- Building a JavaScript Bundler

Dependent on Dependency Managers

JavaScript dependency managers such as npm, Yarn, or pnpm have had a tremendous impact on the JavaScript ecosystem’s evolution. They enable the constant search for novel solutions in the front-end space. However, their ease of use and the high degree of modularization across packages come with many downsides. Existing JavaScript dependency managers are actually not very good at managing dependencies. Instead, they are primarily convenient tools for downloading and extracting artifacts, with a few task-runner capabilities sprinkled in.

This article won’t solve the fundamental problems of package managers. Instead, I’m hoping to provide a definitive guide to avoiding black holes on your hard drive[1] and gaining control over your third-party dependencies.

Many tools analyze, parse, process, or otherwise work with the files in a node_modules folder. Adding many large dependencies tends to slow down installs significantly[2] and makes every one of those operations slower for everyone, even if individuals only use a subset of the tools in a project. Applying the methods below reduced the size of third-party dependencies within Facebook’s React Native codebase by an order of magnitude, improved install times by an equal factor, and sustained those wins.

Taking Ownership of Dependencies

What would it look like if we took ownership of our dependencies? As discussed in a previous post about Principles of Developer Experience:

[An] example is reducing external dependencies or choosing them more carefully. Yes, third-party dependencies undoubtedly help us move fast initially, but using them means we lose control over our stack. We gain option value and control by removing dependencies or by actively maintaining them.

Concretely, it looks like this:

- Analyze dependencies

- Remove unused dependencies

- Keep dependencies up-to-date

- Clean up duplicated packages

- Align on a single package for a well-defined purpose

- Fork packages when necessary

- Track the number and size of dependencies

This guide primarily focuses on Yarn 1, but many recommendations apply equally to other package managers. Keep in mind that some package managers use symlinks, hard links, or other tricky and opaque techniques to avoid copying dependencies into place directly. I have tried some of them and was involved in the creation of another. I have also seen death by a thousand cuts at scale with those solutions. Let’s take it for granted that materialized files on the file system are the only way to go, just as first-party code is stored on a file system and in version control. Alright? Let’s dive in!

Analyze dependencies

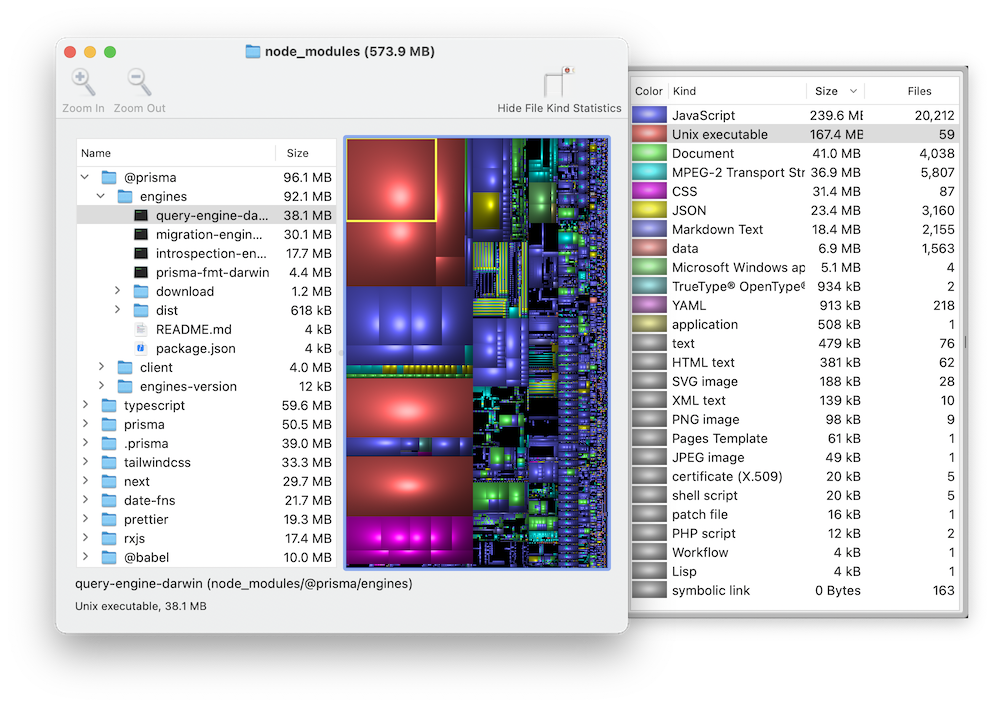

First, we need to understand what kind of packages are in our node_modules folders. I haven’t found a good all-in-one solution for analyzing a node_modules folder, so instead, we’ll use four different tools:

- Disk Inventory X and du -sh ./node_modules/* | sort -nr | grep -E '^ *[0-9.]+M' to analyze the size of dependencies in node_modules.[3]

- yarn why <packagename>: This command explains why a specific package ended up in your dependency tree. It lists all the other packages that depend on it and which versions they depend on.

- Packagephobia: Use it to see roughly how big a package is on disk.

- Bundlephobia: Check how big a package usually is when bundled in an app.
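If you’d rather script the size analysis than eyeball it, here is a minimal Node.js sketch in the spirit of the du pipeline above. It is an illustration, not a replacement for those tools: it sums apparent file sizes (so its numbers will differ somewhat from du’s disk-block counts), does not follow symlinks, reports scoped packages such as @babel/core individually, and the function names packageSizes, directorySize, and formatMiB are hypothetical.

```javascript
// Node.js sketch approximating `du -sh ./node_modules/* | sort -nr`:
// lists top-level packages by size, largest first.
const fs = require("node:fs");
const path = require("node:path");

// Recursively sum the apparent size (in bytes) of all files below `dir`.
// Dirent types come from readdir without following symlinks, so symlinked
// files and folders are skipped rather than counted twice.
function directorySize(dir) {
  let total = 0;
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) {
      total += directorySize(fullPath);
    } else if (entry.isFile()) {
      total += fs.statSync(fullPath).size;
    }
  }
  return total;
}

// Return [{ name, bytes }] for each package directly inside node_modules.
// Scope folders such as @babel are expanded into @babel/core, @babel/parser, etc.
function packageSizes(nodeModulesDir) {
  const results = [];
  for (const entry of fs.readdirSync(nodeModulesDir, { withFileTypes: true })) {
    // Skip plain files and hidden folders like .bin or .cache.
    if (!entry.isDirectory() || entry.name.startsWith(".")) continue;
    const entryPath = path.join(nodeModulesDir, entry.name);
    if (entry.name.startsWith("@")) {
      for (const scoped of fs.readdirSync(entryPath, { withFileTypes: true })) {
        if (!scoped.isDirectory()) continue;
        results.push({
          name: `${entry.name}/${scoped.name}`,
          bytes: directorySize(path.join(entryPath, scoped.name)),
        });
      }
    } else {
      results.push({ name: entry.name, bytes: directorySize(entryPath) });
    }
  }
  return results.sort((a, b) => b.bytes - a.bytes);
}

// Format a byte count as MiB with one decimal place.
function formatMiB(bytes) {
  return `${(bytes / (1024 * 1024)).toFixed(1)} MiB`;
}
```

From a one-off script, something like packageSizes("node_modules").slice(0, 20).forEach(({ name, bytes }) => console.log(formatMiB(bytes), name)) prints the twenty largest entries.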

The image at the top of this section shows an example of Disk Inventory X analyzing a node_modules folder.[4]
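To make the question behind yarn why concrete, the sketch below walks a Yarn 1-style node_modules tree, reads each installed package.json, and reports which packages declare a dependency on a given name, including copies nested in deeper node_modules folders. This is an approximation under stated assumptions, not how Yarn implements the command: it ignores peer dependencies and your root package.json, and packageDirs and dependentsOf are hypothetical helpers.

```javascript
// Approximates the question `yarn why <name>` answers by scanning installed
// package.json files. This is not Yarn's implementation.
const fs = require("node:fs");
const path = require("node:path");

// Yield every package directory directly inside `nodeModulesDir`,
// expanding scope folders into @scope/name entries.
function* packageDirs(nodeModulesDir) {
  if (!fs.existsSync(nodeModulesDir)) return;
  for (const entry of fs.readdirSync(nodeModulesDir, { withFileTypes: true })) {
    if (!entry.isDirectory() || entry.name.startsWith(".")) continue;
    const entryPath = path.join(nodeModulesDir, entry.name);
    if (entry.name.startsWith("@")) {
      for (const scoped of fs.readdirSync(entryPath, { withFileTypes: true })) {
        if (scoped.isDirectory()) yield path.join(entryPath, scoped.name);
      }
    } else {
      yield entryPath;
    }
  }
}

// Return [{ dependent, range }] for every installed package that lists
// `target` in dependencies or optionalDependencies, recursing into nested
// node_modules folders where transitive copies live.
function dependentsOf(target, nodeModulesDir) {
  const found = [];
  for (const dir of packageDirs(nodeModulesDir)) {
    const manifestPath = path.join(dir, "package.json");
    if (fs.existsSync(manifestPath)) {
      const manifest = JSON.parse(fs.readFileSync(manifestPath, "utf8"));
      const range =
        manifest.dependencies?.[target] ?? manifest.optionalDependencies?.[target];
      if (range !== undefined) {
        found.push({ dependent: `${manifest.name}@${manifest.version}`, range });
      }
    }
    found.push(...dependentsOf(target, path.join(dir, "node_modules")));
  }
  return found;
}
```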

Remove unused dependencies

It may seem obvious, but many projects carry a lot of dead weight. It’s much easier to add dependencies than to remove them. You may have dependencies that you keep around, and maybe even keep upgrading, even though you aren’t using them. I recommend auditing all dependencies listed in each package.json file to see which ones you can remove. This analysis is usually a manual process, as static analysis tools may miss some dynamic use cases. I recommend using ripgrep or the search functionality in your editor. Let’s say you’d like to check whether you have finished migrating from moment.js to date-fns so you can remove moment. You can run something like this in your terminal to find JavaScript require or import/export statements that reference it:

bash

If the above doesn’t display any results, I usually check for the exact string via rg 'moment' and scan whether I’m missing something obvious. Keep in mind that configuration files for tools like Babel, ESLint, or Jest may reference module names directly rather than through import statements. If you remove a dependency that is only referenced that way, you’ll likely break something in your project.

Once you confirm that the package is not in use, remove it from package.json and run yarn. Repeat this process for each package in your dependencies and devDependencies. You can use a tool like depcheck to do this in a more automated fashion via npx depcheck, but the same caveats apply.
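The core of what depcheck automates can be sketched in a few lines: collect the package names that appear in require, import, or export-from statements, then compare them with the names declared in package.json. This is a simplified, regex-based illustration with hypothetical function names, not depcheck’s implementation; like the manual search, it cannot see dynamic requires or module names referenced only from Babel, ESLint, or Jest configuration.

```javascript
// depcheck-style sketch: which declared dependencies never appear in an
// import, require, or export-from statement? Regex-based, so dynamic
// requires and names used only in config files are invisible to it.

// Matches require("x"), import("x"), import ... from "x",
// export ... from "x", and side-effect imports like import "x".
const SPECIFIER_PATTERN =
  /(?:\brequire\s*\(\s*|\bimport\s*\(\s*|\bfrom\s+|\bimport\s+)["']([^"']+)["']/g;

// Map a specifier to its package name: "lodash/get" -> "lodash",
// "@babel/core/lib/x" -> "@babel/core"; relative paths return null.
function packageNameOf(specifier) {
  if (specifier.startsWith(".") || specifier.startsWith("/")) return null;
  const parts = specifier.split("/");
  return specifier.startsWith("@") ? parts.slice(0, 2).join("/") : parts[0];
}

// Collect the package names referenced by one source file's text.
function importedPackages(sourceText) {
  const names = new Set();
  for (const match of sourceText.matchAll(SPECIFIER_PATTERN)) {
    const name = packageNameOf(match[1]);
    if (name) names.add(name);
  }
  return names;
}

// Return declared dependencies (dependencies + devDependencies) that no
// source text references, sorted alphabetically.
function findUnusedDependencies(packageJson, sourceTexts) {
  const used = new Set();
  for (const text of sourceTexts) {
    for (const name of importedPackages(text)) used.add(name);
  }
  const declared = Object.keys({
    ...packageJson.dependencies,
    ...packageJson.devDependencies,
  });
  return declared.filter((name) => !used.has(name)).sort();
}
```

Feed it the contents of your source files (read with fs.readFileSync) and treat the result as candidates for removal, not verdicts: a dependency like jest, which is invoked from the command line and configured by name, will show up as unused even though removing it would break your test setup.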

Keep dependencies up-to-date

Have you ever experienced “upgrade cliffs”? That’s when a dependency’s version is so far behind that upgrading takes significant effort and slows down entire engineering teams. I’ve found that making upgrades part of everyone’s ongoing responsibility amortizes this cost over time and across engineering organizations. With this approach, people will move slightly slower on average, but they will never get stuck behind a pile of breaking changes that is too hard to overcome.

Keeping all your dependencies up-to-date will make it easier to move away from legacy packages and to follow through with the subsequent steps in this guide. You can use yarn outdated and yarn upgrade-interactive to check for and upgrade your dependencies to their latest versions.[5] However, be mindful of breaking changes and bugs – the more automated testing you have to increase your confidence, the closer you can stay to the latest version of your dependencies. There is nothing worse than upgrading a package just to be on the latest version, without understanding the changes, and causing an issue in production.
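Before upgrading, it can help to see which dependencies are a full major version behind, since that is where upgrade cliffs form. The sketch below assumes you have already collected current and latest versions into a hypothetical { name: { current, latest } } map, for example by transcribing yarn outdated’s table; it does not parse Yarn’s output, and it ignores prerelease ordering.

```javascript
// Sketch: group dependencies by how far behind their latest release they are.
// Input is a hypothetical { name: { current, latest } } map assembled by hand
// or by a script; this does not parse `yarn outdated` output.

// "7.12.3-beta.1+build" -> [7, 12, 3]; prerelease and build tags are ignored.
function parseVersion(version) {
  return version.split(/[-+]/)[0].split(".").map(Number);
}

// Classify the gap between two versions as major, minor, patch, or up-to-date.
// Anything that is not behind (including newer-than-latest) is up-to-date.
function drift(current, latest) {
  const [cMajor, cMinor, cPatch] = parseVersion(current);
  const [lMajor, lMinor, lPatch] = parseVersion(latest);
  if (lMajor !== cMajor) return lMajor > cMajor ? "major" : "up-to-date";
  if (lMinor !== cMinor) return lMinor > cMinor ? "minor" : "up-to-date";
  return lPatch > cPatch ? "patch" : "up-to-date";
}

// Bucket package names by drift so major-version gaps stand out.
function groupByDrift(packages) {
  const groups = { major: [], minor: [], patch: [], "up-to-date": [] };
  for (const [name, { current, latest }] of Object.entries(packages)) {
    groups[drift(current, latest)].push(name);
  }
  return groups;
}
```

Packages in the major bucket are the ones worth scheduling deliberately; patch and minor gaps are usually cheap to close as part of routine upgrades.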

For example, I used to track Babel’s releases closely at Facebook by building a system to compare the new production bundles with the previous version for all of React Native’s product code (many millions of lines of code). It allowed me to ship updates with confidence, and in some cases, I managed to deploy a new version of Babel to a billion users within eight hours of its release.

Clean up duplicated packages

The algorithms used in some JavaScript package managers don’t continuously optimize the dependency graph. Your lock file likely installs multiple versions of the same package even though a single version would satisfy every requested semantic versioning (semver) range. yarn-deduplicate can optimize your lock file in those situations, and I generally recommend running it every time a package is added, updated, or removed: npx yarn-deduplicate yarn.lock. You can also add a verification step to your Continuous Integration (CI) pipeline, like yarn-deduplicate yarn.lock --list --fail.
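To get a quick picture of how much duplication your lock file contains, you can group yarn.lock entries by package name and look for names that resolve to more than one version. This is a rough sketch that assumes Yarn 1’s lockfile format, and findDuplicates is a hypothetical helper. Unlike yarn-deduplicate, it does not check whether a single version would satisfy all requested ranges, so it reports every multi-version package, including ones that legitimately need separate copies.

```javascript
// Sketch: find packages that a Yarn 1 yarn.lock resolves to more than one
// version. Does not check whether one version could satisfy every range.

// '"@babel/core@^7.0.0"' -> "@babel/core"; 'lodash@^4.17.15' -> "lodash".
// Searching for "@" from index 1 skips the leading "@" of scoped names.
function nameFromSpec(spec) {
  const unquoted = spec.trim().replace(/^"|"$/g, "");
  const at = unquoted.indexOf("@", 1);
  return at === -1 ? unquoted : unquoted.slice(0, at);
}

// Return { packageName: [versions] } for names with two or more versions.
function findDuplicates(lockText) {
  const versions = new Map(); // package name -> Set of resolved versions
  let currentNames = [];
  for (const line of lockText.split(/\r?\n/)) {
    if (line.startsWith("#") || line.trim() === "") continue;
    // Entry keys are unindented lines ending in ":", e.g.
    // "@babel/parser@^7.0.0", "@babel/parser@^7.1.0":
    if (!line.startsWith(" ") && line.endsWith(":")) {
      currentNames = [...new Set(line.slice(0, -1).split(",").map(nameFromSpec))];
      continue;
    }
    // The resolved version is the two-space-indented `version "x.y.z"` line.
    const match = line.match(/^ {2}version "([^"]+)"$/);
    if (match) {
      for (const name of currentNames) {
        if (!versions.has(name)) versions.set(name, new Set());
        versions.get(name).add(match[1]);
      }
    }
  }
  const duplicates = {};
  for (const [name, found] of versions) {
    if (found.size > 1) duplicates[name] = [...found];
  }
  return duplicates;
}
```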

The tools that cause the most trouble with this problem are monorepo toolchains such as Babel or Jest. I worked on projects where we had four versions of Babel’s parser, two versions of almost all Babel plugins, and three versions of various Jest packages. yarn-deduplicate worked to some extent, but there was no good way to update all those packages to use the latest versions. I tried:

- Manually update all entries in all package.json files to require the latest versions.

- Use yarn upgrade or yarn upgrade-interactive.

- Use Yarn resolutions to overwrite semantic versioning constraints and lock all packages to their latest version.

None of these worked well, and they often made things worse. I’ve found only one reliable way to ensure that only one version of each Babel package is installed in a project: on every Babel upgrade, manually remove all entries starting with @babel/ from the yarn.lock file and run yarn again, which gives Yarn a chance to start over for that subset of its dependency graph. You’ll end up with a single version of each Babel package – the latest ones.[6]
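The manual @babel/ clean-up can be scripted. The sketch below assumes Yarn 1’s lockfile layout, where each entry is a block that starts with its key line and is separated from the next by a blank line; dropLockEntries is a hypothetical helper. You would still run yarn afterwards so that it re-resolves the removed packages and rewrites the file in its own format.

```javascript
// Sketch: remove every Yarn 1 lockfile entry whose key starts with `prefix`
// (for example "@babel/") so the next `yarn` run re-resolves those packages.
function dropLockEntries(lockText, prefix) {
  // Entries are separated by blank lines; the leading "# yarn lockfile v1"
  // comment becomes its own block and is kept.
  const blocks = lockText.trim().split(/\n\s*\n/);
  const kept = blocks.filter((block) => {
    // The first line of an entry is its key, possibly quoted, e.g.
    // "@babel/core@^7.0.0", "@babel/core@^7.12.0":
    const key = block.split("\n")[0].replace(/^"/, "");
    return !key.startsWith(prefix);
  });
  return kept.join("\n\n") + "\n";
}
```

For example, fs.writeFileSync("yarn.lock", dropLockEntries(fs.readFileSync("yarn.lock", "utf8"), "@babel/")), followed by yarn and a careful look at the resulting diff.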

Another suggestion is to explore the semver ranges of packages you depend on and send Pull Requests that upgrade their dependencies or replace pinned versions with semver ranges, so that downstream projects can benefit from more de-duplication.
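The de-duplication opportunity comes down to one question: is there a single published version that satisfies every range that dependents ask for? This deliberately simplified sketch handles only exact versions, ^ and ~ ranges, and plain major.minor.patch versions; real projects should rely on the semver package, and the helper names here are hypothetical.

```javascript
// Deliberately simplified semver check: supports exact versions, ^x.y.z and
// ~x.y.z only (no prereleases, x-ranges, or ||). Use the `semver` package
// for real work.

// "7.12.3" -> [7, 12, 3]
function parseTriple(version) {
  return version.split(".").map(Number);
}

// Negative, zero, or positive, like a sort comparator.
function compareTriples(a, b) {
  for (let i = 0; i < 3; i++) {
    if (a[i] !== b[i]) return a[i] - b[i];
  }
  return 0;
}

// Translate a range into [inclusive lower bound, exclusive upper bound].
function rangeBounds(range) {
  const op = range[0];
  const base = parseTriple(op === "^" || op === "~" ? range.slice(1) : range);
  const [major, minor, patch] = base;
  if (op === "^") {
    // Caret allows changes that keep the left-most non-zero part fixed.
    if (major > 0) return [base, [major + 1, 0, 0]];
    if (minor > 0) return [base, [0, minor + 1, 0]];
    return [base, [0, 0, patch + 1]];
  }
  if (op === "~") return [base, [major, minor + 1, 0]];
  return [base, [major, minor, patch + 1]]; // exact version
}

function satisfies(version, range) {
  const [low, high] = rangeBounds(range);
  const v = parseTriple(version);
  return compareTriples(v, low) >= 0 && compareTriples(v, high) < 0;
}

// Highest published version that satisfies every range, or null if none does.
function bestSharedVersion(ranges, published) {
  const candidates = published
    .filter((version) => ranges.every((range) => satisfies(version, range)))
    .sort((a, b) => compareTriples(parseTriple(b), parseTriple(a)));
  return candidates.length > 0 ? candidates[0] : null;
}
```

With ranges like ^7.0.0, ^7.10.0, and ~7.12.1, a single copy (the highest published 7.12.x) can serve all three dependents. If one of them pins 7.9.0 instead, no shared version exists and the package manager has to install two copies, which is why widening pinned versions upstream helps.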

Align on a single package for a well-defined purpose

On a large project, you may end up with multiple packages serving the same purpose, and sometimes even with multiple major versions of the same package. I have found that on larger teams, somebody may pull in a large dependency, use it exactly once, and bloat the production bundles, all while a similar and smaller package is already widely used. You can avoid this problem with strict style guides, documentation, and code review. Even in the best environments, though, the problem is exacerbated by transitive dependencies that duplicate the purpose of packages you already use. For example, two of your direct dependencies may both pull in a package for parsing command-line options that differs from the one your project uses. It may make sense to analyze which packages are already in your node_modules and align on a single package for each purpose.
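Once you know what is installed, checking for overlapping packages can be as simple as keeping a list of packages per purpose and flagging purposes where more than one appears. The groups below are hypothetical examples you would replace with your own, and the set of installed names is assumed to come from elsewhere, for example from walking node_modules (nested copies included) or from your lock file.

```javascript
// Sketch: flag purposes served by more than one installed package.
// These groups are hypothetical examples; curate your own list.
const PURPOSE_GROUPS = {
  dates: ["moment", "date-fns", "dayjs", "luxon"],
  "command-line parsing": ["yargs", "commander", "minimist", "meow"],
};

// `installed` is a Set of package names, assumed to be gathered elsewhere.
// Returns { purpose: [installed packages] } for purposes with 2+ packages.
function overlappingPurposes(installed, groups = PURPOSE_GROUPS) {
  const overlaps = {};
  for (const [purpose, candidates] of Object.entries(groups)) {
    const present = candidates.filter((name) => installed.has(name));
    if (present.length > 1) overlaps[purpose] = present;
  }
  return overlaps;
}
```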

Fork packages when necessary

In some cases, packages are unmaintained, too bloated, or moving too slowly. It doesn’t make sense to block a product feature at your company while you wait for a third-party open-source maintainer to publish a release of a package you are using. I notice that people are hesitant to fork packages. I recommend being more liberal with the fork button on GitHub and publishing your own custom versions of packages. Forks don’t have to be long-lived. They can serve a single purpose, like pulling in a fix ahead of an upstream release, and can be removed later. Yes, forks can add a maintenance burden, but they also put you in control of more of the code you are running in your projects.[7]

We can use Yarn resolutions to swap existing packages with a custom fork. In your package.json:

json

I previously did this with a popular package that ships 20 MiB of stuff when only about 2 MiB were necessary. We used to have three copies of this package. Aligning on a single version and moving to the fork reduced the space it occupied from 60 MiB to 2 MiB.

Usually, after forking a package, I will send a Pull Request to the original package. For example, this improvement to remark-prism reduces the package’s size on disk from 10.7 MiB to 0.03 MiB. Most of the time, I intend to keep forks short-lived. Sometimes, though, the changes are at odds with the package owners’ goals, in which case I keep the forked version, and that’s fine.

Track the number and size of dependencies

It’s great to reduce the number and size of dependencies once, but it’s best to sustain the wins long-term. I recommend setting up a CI step that analyzes the size of node_modules using something like du -sh node_modules within a project whenever yarn.lock or package.json files are changed. Make the CI step run on every Pull Request, and if the size increases compared to master, alert somebody to take a look.
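Here is a minimal sketch of such a CI step in Node.js, assuming a hypothetical baseline (the total size in bytes measured on master) that your pipeline stores somewhere, such as a text file or a build artifact. It walks node_modules without following symlinks, and with the default tolerance of 0 it flags any increase, matching the rule above; pass a small tolerance if you want some slack. The function names are illustrative.

```javascript
// Sketch of a CI size check for node_modules. The baseline (bytes measured
// on master) and how it is stored are assumptions left to your pipeline.
const fs = require("node:fs");
const path = require("node:path");

// Apparent size in bytes of all files below `dir`; symlinks are not followed.
function totalSize(dir) {
  let total = 0;
  for (const entry of fs.readdirSync(dir, { withFileTypes: true })) {
    const fullPath = path.join(dir, entry.name);
    if (entry.isDirectory()) total += totalSize(fullPath);
    else if (entry.isFile()) total += fs.statSync(fullPath).size;
  }
  return total;
}

// Compare a measurement with the baseline. `growth` is a fraction
// (0.1 means 10% larger); any growth above `tolerance` fails the check.
function checkGrowth(currentBytes, baselineBytes, tolerance = 0) {
  if (baselineBytes <= 0) {
    return { ok: currentBytes <= 0, growth: currentBytes > 0 ? Infinity : 0 };
  }
  const growth = (currentBytes - baselineBytes) / baselineBytes;
  return { ok: growth <= tolerance, growth };
}

// Human-readable message for the Pull Request or CI log.
function formatReport(result, currentBytes, baselineBytes) {
  const percent = (result.growth * 100).toFixed(1);
  return result.ok
    ? `node_modules size OK (${currentBytes} bytes, ${percent}% vs. baseline)`
    : `node_modules grew ${percent}% (${baselineBytes} -> ${currentBytes} bytes); please take a look`;
}
```

In CI you might call checkGrowth(totalSize("node_modules"), baselineBytes) and, when ok is false, post the formatReport message on the Pull Request or exit with a non-zero code so that somebody takes a look.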

While you can undoubtedly build automation to prevent people from checking in new modules, I’ve found that the best results can be achieved by talking to people and building a shared sense of responsibility across everyone working with the same codebase. After all, it’s tough to know whether there is a good reason to add a dependency or not, and you don’t want to end up being a gatekeeper. Instead, it’s helpful to point out that adding large dependencies will make things slower for everyone or point people to similar packages you are using already. Most of the time, it turns out that people just didn’t know better, and they will appreciate the help.

For example, I recently ran into a case where somebody added a few packages that would have doubled the size of a node_modules folder. Simply pointing it out, explaining why it isn’t ideal, and giving them two or three other ways to solve their problem helped avoid shipping the Pull Request in that state. Think about it this way: if somebody adds a hundred lines of code, we put that code through lots of scrutiny during code review. If somebody adds a single line to a package.json file that pulls 100 MiB of code into a project, bloats the production bundle, and slows down tooling, we usually just wave the change through because we don’t see the implications in the Pull Request itself.[8] You could avoid this problem by checking third-party dependencies directly into version control.

Other Recommendations

Yarn has an autoclean command that can automatically remove files matching an exclusion list. You can use it to remove anything that’s not relevant to your project, such as examples, tests, Markdown files, and others. You can enable it by running yarn autoclean --init and checking the resulting .yarnclean file into version control. Unfortunately, this command runs after installing dependencies instead of during an install, meaning that every invocation of Yarn will be slower, sometimes even by multiple seconds. It’s an excellent feature, but I only recommend it for projects that check node_modules into version control.

The degree to which the above suggestions work for you will depend on your project and team. At the least, you’ll gain more control over your project and reduce the uncontrolled growth of third-party dependencies. At best, you’ll prevent workflows from regressing in performance and always stay lean and fast. Next up: Rethinking JavaScript Infrastructure.

Footnotes

1. The website you are on right now has more than 40,000 files in its node_modules folder, weighing more than 570 MiB 😬

2. In my experience, install times grow at an above-linear rate as the complexity of the dependency graph increases. Fewer packages will lead to faster install times, even if the packages are bigger.

3. This is a convenient command to consider aliasing in your shell configuration.

4. prisma is great, but maybe it shouldn’t duplicate the same large binary across three folders?

5. You may use Dependabot on GitHub, but in my experience, people spend less time validating its changes than when they upgrade manually.

6. Unless, of course, you have dependencies that require a specific version, in which case it makes sense to upgrade those dependencies first or use Yarn resolutions to overwrite the constraints.

7. For very small changes, you may prefer to use patch-package instead.

8. And yarn.lock changes are collapsed by default on GitHub 😩